Top 9 Open-source AI Agent Frameworks for 2025

AI agents are reshaping a wide range of industries. Healthcare, e-commerce, legal, and supply chain organizations are adopting AI agents to automate tasks, create innovative solutions, and scale and improve their operations.

Within the next five years, AI agents could be present in nearly every sector we know today. Because agents differ in the roles they play and the tasks they perform, building one tailored to a specific use case has traditionally been a complex process.

Agentic AI frameworks have made this process much easier. With an AI agent framework, developers can build different kinds of AI agents faster and with less hassle while following established best practices. These frameworks serve as a foundation for building scalable and efficient AI systems.

We reviewed nine of the best open-source AI agent frameworks, examining their key strengths, use cases, and limitations. We focused on features such as multi-agent orchestration, supported modalities, and LLM integration.

Benefits of AI frameworks

An AI framework is a set of libraries, datasets, tools, and functionalities required for developing an AI system. These frameworks form a digital ecosystem that serves as a structured foundation for creating personalized AI agents for various use cases.

Pre-built tools and components spare developers from implementing complex algorithms from scratch, so enterprises and developers can focus on innovation rather than tedious groundwork.

We can compare it to a drag-and-drop website template, where behind-the-scenes technical tasks are taken care of, allowing users to build a tailored website with easy-to-use tools. Let’s break down the three key benefits that AI frameworks offer: faster development, scalability, and sustainability.

1. Faster Development

Building AI agents from the ground up takes a considerable amount of time. An agent framework won’t let you finish in minutes, but it does make the process significantly faster. Developers get pre-built tools, models, and libraries that cut the time spent on developing algorithms, debugging, and writing custom code.

2. Scalability

A business needs AI systems that can scale as its users and data grow, and AI frameworks make it easier to build scalable AI agents. Open-source AI frameworks typically come with established best practices and a proven architecture, so developers don’t have to work out on their own what to include and how to design a well-structured, scalable pipeline. Some frameworks also support distributed computing, which spreads work across several servers or machines and lets your AI agents handle a growing workload as the business expands.

3. Sustainability

AI frameworks help businesses develop and update their AI agents with fewer resources. With ready-made tools and components, developers can streamline their processes, use less compute, and conserve energy.

For deeper insights into AI agents, read through our comprehensive AI agent guide.

Core functions of AI frameworks

AI frameworks come with many functionalities and tools to help design a well-structured machine learning process and develop AI agents with ease. Not all frameworks offer the same elements, but some common functions of AI frameworks are:

- Agent Architecture

- Environmental Integration Layer

- Task Orchestration Framework

- Communication Infrastructure

- Performance Optimization

Agent Architecture

Agent architecture is the combination of logic and models that defines how an AI agent reasons and interacts with its environment. It specifies how the agent interprets input, makes decisions, and delivers output. For example, the agent architecture of a self-driving car defines how data from cameras and sensors (input) is turned into control decisions about speed, direction, and safety (output).
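
As a rough illustration of the input, decision, and output flow, here is a minimal Python sketch of a perceive-decide-act loop for a toy speed controller. The `Observation` fields, the `SpeedAgent` class, and the 30-meter threshold are hypothetical and far simpler than any real self-driving stack; the point is only to show where input handling, decision logic, and output live in an agent’s architecture.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Input: simplified readings from cameras and sensors (hypothetical fields)."""
    obstacle_distance_m: float  # distance to the nearest obstacle ahead
    speed_limit_kmh: float      # limit detected from road signs
    current_speed_kmh: float


class SpeedAgent:
    """Toy agent that maps an observation to a speed decision."""

    SAFE_DISTANCE_M = 30.0  # hypothetical safety threshold

    def decide(self, obs: Observation) -> str:
        # Safety rules take priority over everything else.
        if obs.obstacle_distance_m < self.SAFE_DISTANCE_M:
            return "brake"
        if obs.current_speed_kmh > obs.speed_limit_kmh:
            return "slow_down"
        if obs.current_speed_kmh < obs.speed_limit_kmh:
            return "accelerate"
        return "hold"

    def act(self, action: str) -> str:
        # Output: a real system would drive actuators; here we only report the action.
        return f"action={action}"

    def step(self, obs: Observation) -> str:
        # One pass of the perceive -> decide -> act loop.
        return self.act(self.decide(obs))


agent = SpeedAgent()
print(agent.step(Observation(obstacle_distance_m=12.0, speed_limit_kmh=50, current_speed_kmh=40)))
# prints "action=brake"
```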

Environmental Integration Layer

The Environmental Integration Layer connects an AI agent to its surrounding environment, helping it receive and interpret real-world data. For example, a smart home system relies on its environmental integration layer to collect readings from devices such as thermostats and motion sensors, which the agent then uses to decide whether to adjust the heating or turn the lights on or off.
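
Here is a minimal Python sketch of what such a layer does, assuming made-up device payloads: each device type gets an adapter that converts its raw data into one uniform `Reading` format, so the agent’s decision logic never has to deal with device-specific formats. The payload shapes, adapter names, and the 19 degrees C threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Reading:
    """Normalized observation handed to the agent."""
    source: str
    kind: str
    value: float


def from_thermostat(raw: dict) -> Reading:
    # Hypothetical thermostat payload, e.g. {"id": "t1", "temp_f": 68}
    celsius = (raw["temp_f"] - 32) * 5 / 9
    return Reading(source=raw["id"], kind="temperature_c", value=round(celsius, 1))


def from_motion_sensor(raw: dict) -> Reading:
    # Hypothetical motion payload, e.g. {"sensor": "m1", "motion": True}
    return Reading(source=raw["sensor"], kind="motion", value=1.0 if raw["motion"] else 0.0)


# The integration layer maps each device type to its adapter.
ADAPTERS: Dict[str, Callable[[dict], Reading]] = {
    "thermostat": from_thermostat,
    "motion": from_motion_sensor,
}


def integrate(events: List[tuple]) -> List[Reading]:
    """Convert raw (device_type, payload) events into uniform readings."""
    return [ADAPTERS[device_type](payload) for device_type, payload in events]


def decide(readings: List[Reading]) -> List[str]:
    """Agent logic that only ever sees normalized readings."""
    actions = []
    for r in readings:
        if r.kind == "temperature_c" and r.value < 19.0:
            actions.append("heating_on")
        if r.kind == "motion" and r.value > 0:
            actions.append("lights_on")
    return actions


readings = integrate([("thermostat", {"id": "t1", "temp_f": 62}), ("motion", {"sensor": "m1", "motion": True})])
print(decide(readings))  # ['heating_on', 'lights_on']
```

Adding a new device type means writing one more adapter; the decision logic stays unchanged.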

Task Orchestration Framework

A task orchestration framework ensures that an AI agent completes tasks in the correct sequence and context. For example, suppose a user asks an AI chatbot for the sum of 4 and 7 and whether it is greater than 10. The correct sequence is to add the numbers first and then compare the result with 10. An orchestration framework maintains this sequence.
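
The 4 + 7 example can be expressed as a tiny dependency graph. This sketch assumes Python 3.9+ for the standard-library `graphlib` module. It declares that `compare` depends on `add` and lets a topological sort determine the execution order. The task names and structure are illustrative and not any framework’s API.

```python
from graphlib import TopologicalSorter

# Each task is (function, list of tasks whose results it needs).
tasks = {
    "add": (lambda results: 4 + 7, []),
    "compare": (lambda results: results["add"] > 10, ["add"]),
}


def run_in_order(tasks):
    """Execute tasks so that every task runs after its dependencies."""
    graph = {name: set(deps) for name, (_, deps) in tasks.items()}
    results = {}
    for name in TopologicalSorter(graph).static_order():
        func, _ = tasks[name]
        results[name] = func(results)
    return results


print(run_in_order(tasks))  # {'add': 11, 'compare': True}
```

Because the order comes from the declared dependencies rather than from how the tasks are listed, defining `compare` before `add` still runs `add` first.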

Communication Infrastructure

The communication infrastructure enables an AI agent to exchange information with its own modules, with other AI agents, and with external systems. Without it, multi-agent systems, in which several AI agents work together, cannot function.
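
One simple way to picture this infrastructure is a message bus where each agent has its own inbox. The Python sketch below is a hypothetical in-process version with made-up agent names. Distributed systems usually place a queue, RPC layer, or message broker between processes instead, but the send and receive contract is the same idea.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    sender: str
    recipient: str
    content: str


class MessageBus:
    """Toy in-process message bus; each agent has its own FIFO inbox."""

    def __init__(self) -> None:
        self.inboxes = defaultdict(deque)

    def send(self, msg: Message) -> None:
        self.inboxes[msg.recipient].append(msg)

    def receive(self, agent: str) -> Optional[Message]:
        """Return the oldest pending message for `agent`, or None if the inbox is empty."""
        inbox = self.inboxes[agent]
        return inbox.popleft() if inbox else None


bus = MessageBus()
bus.send(Message("researcher", "writer", "Found 3 relevant sources"))
msg = bus.receive("writer")
print(msg.sender, "->", msg.content)  # researcher -> Found 3 relevant sources
bus.send(Message("writer", "researcher", "Thanks, drafting now"))
```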

Performance Optimization

Performance optimization makes an AI agent faster and more efficient by improving its speed, throughput, and resource utilization. This matters because AI agents are typically expected to scale and keep performing efficiently as the volume of data grows.
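
Caching is one common optimization: if an agent repeatedly makes the same expensive call, the result can be reused instead of recomputed. This sketch uses Python’s standard `functools.lru_cache`. The `expensive_model_call` function is a hypothetical stand-in that sleeps to simulate model latency.

```python
import time
from functools import lru_cache

calls = 0  # counts how often the underlying "model" actually runs


@lru_cache(maxsize=256)
def expensive_model_call(prompt: str) -> str:
    """Hypothetical stand-in for a slow LLM or embedding call."""
    global calls
    calls += 1
    time.sleep(0.05)  # simulate network/model latency
    return prompt.upper()


expensive_model_call("summarize the refund policy")
expensive_model_call("summarize the refund policy")  # served from the cache
print(calls)                                   # 1
print(expensive_model_call.cache_info().hits)  # 1
```

Caching only helps when identical inputs recur and the output is safe to reuse; responses that must stay fresh need an expiry policy instead.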

Let’s explore the best open-source AI frameworks and analyze their use cases, modalities, and key strengths to find the most suitable AI solution for your needs.

Frameworks Overview

1. GraphBit

GraphBit is an open-source LLM agent framework that gives developers speed, security, and scalability when building AI agents. It is the world’s first agentic AI framework with a Rust core and a Python wrapper, which sets GraphBit apart from its competitors. GraphBit combines Rust-level efficiency with Python-level ergonomics, delivering a reliable AI framework for your machine learning pipelines.

Key Strengths

- LLM-Powered Workflows: GraphBit lets developers seamlessly integrate large language models (LLMs) into their workflows. It supports multiple LLM providers, including OpenAI, Anthropic, and Ollama. This allows developers to select the model best suited to each task and manage costs by mixing expensive and cost-effective models.

- Parallel Orchestration Engine: GraphBit’s concurrent and parallel orchestration engine allows multiple tasks to run simultaneously, which boosts overall efficiency.

- Serialization and Deserialization Optimization: Serialization converts in-memory data into a format that can be transmitted between components, and deserialization converts it back; this is how AI agents and other components exchange data. GraphBit optimizes both steps for ultra-low latency.

- ARC Memory Management: Running many tasks concurrently and in parallel can cause crashes or errors if shared data is not handled carefully. GraphBit’s ARC Memory Management ensures that processes running simultaneously behave as expected without interfering with each other’s tasks.

- Enterprise-grade Security: GraphBit provides built-in encryption and compliance-ready modules to ensure enterprise-grade security. This helps organizations comply with the legal and industry standards for data privacy and protection that apply to them.
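
GraphBit’s engine is implemented in Rust, and this article does not show its Python API, so the sketch below does not use it. Instead, it illustrates two of the general ideas above with standard-library Python: independent agent steps run concurrently with `asyncio.gather`, and each result is serialized to JSON and then deserialized, as it would be when passed between components. Note that `asyncio` provides concurrency on a single thread rather than true CPU parallelism. The agent names and delays are hypothetical.

```python
import asyncio
import json
import time


async def agent_task(name: str, delay: float) -> str:
    """Hypothetical agent step; the sleep stands in for an LLM or tool call."""
    await asyncio.sleep(delay)
    # Serialize the result so it can be handed to another component.
    return json.dumps({"agent": name, "status": "done"})


async def run_concurrently() -> list:
    # Independent tasks run concurrently instead of one after another.
    return await asyncio.gather(
        agent_task("summarizer", 0.2),
        agent_task("classifier", 0.2),
        agent_task("extractor", 0.2),
    )


start = time.perf_counter()
raw_results = asyncio.run(run_concurrently())
elapsed = time.perf_counter() - start

# Deserialize each message back into a Python dict.
results = [json.loads(r) for r in raw_results]
print([r["agent"] for r in results])  # ['summarizer', 'classifier', 'extractor']
print(f"{elapsed:.1f}s")  # close to 0.2s, not 0.6s, because the waits overlap
```

`asyncio.gather` returns results in the order the tasks were passed, regardless of which finishes first, so downstream code can rely on that ordering.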

Benchmarks

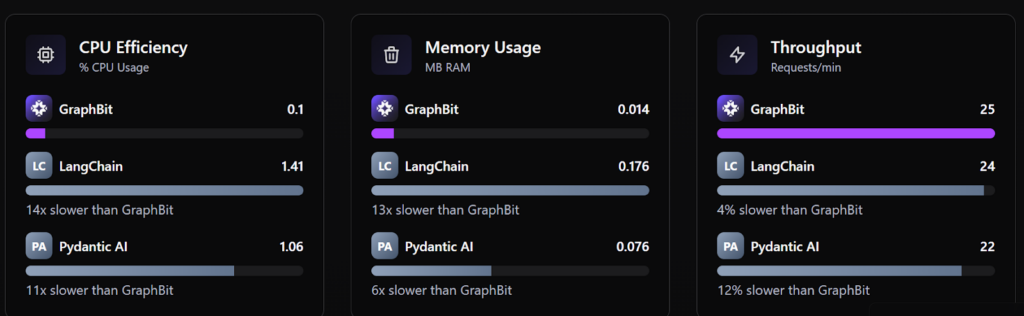

Here are some real-world benchmarks assessing GraphBit’s performance against its major competitors: LangChain and Pydantic AI. These official benchmarks demonstrate that GraphBit outperforms its competitors by 92%, with a low error rate of 0.176%.

CPU Efficiency

- GraphBit is 1400% more efficient than LangChain.

- GraphBit is 1100% more efficient than Pydantic AI.

Memory Usage

- LangChain uses 1300% more memory than GraphBit.

- Pydantic AI uses 600% more memory than GraphBit.

Throughput (Requests per Minute)

- LangChain is 4% slower than GraphBit

- Pydantic AI is 12% slower than GraphBit

Use cases

GraphBit caters to the needs of developers, startups, and enterprises who want to build custom AI agents.

Limitations

- Although the concurrent and parallel architecture is highly beneficial for large-scale AI workflows, it could also be resource-heavy.

- Developers need to be familiar with Rust or advanced Python tooling.

2. LangChain

LangChain is one of the most popular AI agent frameworks, allowing developers to build LLM-based applications and AI agents with any model provider of their choice. It gives developers a high degree of visibility and control, letting them see what happens at each step of their agent.

Key Strengths

- Broad ecosystem of connectors and templates: LangChain’s vast ecosystem of connectors and templates lets developers plug in LLMs, vector stores, file loaders, tooling APIs, and more. This allows developers to pick the model provider (e.g., OpenAI, Anthropic, Hugging Face, or local models) and tools that fit their stack.

- Strong community and enterprise adoption: LangChain has a strong, active community. It is one of the most popular open-source LLM frameworks on GitHub and has been adopted by enterprises such as Replit, Clay, Rippling, Cloudflare, and Workday.
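
The provider flexibility described above rests on a common pattern: application code talks to a small interface, and each provider gets an adapter behind it. The sketch below shows that pattern with a Python `typing.Protocol`. `ChatModel`, `EchoModel`, and `ShoutModel` are hypothetical stand-ins, not LangChain classes, and real adapters would call a provider’s API instead of transforming the prompt locally.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Minimal interface every provider adapter must satisfy (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for one provider."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ShoutModel:
    """Stand-in for a second provider."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def answer(model: ChatModel, question: str) -> str:
    # Application code depends only on the interface, not on a specific provider.
    return model.complete(question)


print(answer(EchoModel(), "hello"))   # echo: hello
print(answer(ShoutModel(), "hello"))  # HELLO
```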

Use Cases

LangChain’s use cases include developing AI assistants, document analysis, recommendations, and research.

Ideal Users

LangChain is suitable for both mature corporations and startups that want to build proof-of-concept or production-grade assistants.

Limitations

- Complex LangChain workflows can add latency and cost overhead.

- Large projects may experience high compute and memory demands.

- Developers can face significant architectural complexity.

3. CrewAI

CrewAI is an open-source framework that allows developers to build multiple AI agents that collaborate as a team to handle complex workflows. It is not built on top of other frameworks such as LangChain; instead, it is built from scratch in Python.

Key Strengths

- Agent Collaboration: CrewAI allows developers to build collaborative AI agents, each with distinct roles, specific skills, tools, and goals. Its built-in collaboration features, such as the Delegate Work Tool, multi-agent coordination, and the Ask Question Tool, help agents communicate and work together as a team. This lets each agent focus on what it does best. For example, an agent can assign tasks to other agents using the Delegate Work Tool and ask another agent on the team a question using the Ask Question Tool.

- Task sharing: CrewAI supports task abstraction and task chaining, which break a large task into smaller chunks and assign each chunk to a specific AI agent. This improves throughput and traceability, making the pipeline easier to optimize even when agents work collaboratively.

- Lightweight and Developer-Friendly Interface: CrewAI is designed to be intuitive for developers. They can start with simple workflows and gradually move to more complex systems. CrewAI is also lightweight and fast because it does not depend on large frameworks or heavy dependencies.
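
To illustrate role-based delegation and task chaining in general terms, here is a plain-Python sketch. The `Agent` and `Crew` classes, the `delegate` and `chain` methods, and the lambda skills are hypothetical and do not reflect CrewAI’s actual API. Each agent’s log shows how chaining keeps the work traceable.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    """A role-based agent with a single skill (hypothetical, not CrewAI's API)."""
    role: str
    skill: Callable[[str], str]
    log: List[str] = field(default_factory=list)

    def work(self, task: str) -> str:
        self.log.append(task)  # record every task for traceability
        return self.skill(task)


class Crew:
    def __init__(self, agents: List[Agent]) -> None:
        self.by_role: Dict[str, Agent] = {a.role: a for a in agents}

    def delegate(self, role: str, task: str) -> str:
        # Route a task to the agent whose role matches.
        return self.by_role[role].work(task)

    def chain(self, roles: List[str], task: str) -> str:
        # Task chaining: each agent's output becomes the next agent's input.
        result = task
        for role in roles:
            result = self.delegate(role, result)
        return result


researcher = Agent("researcher", lambda t: f"notes on {t}")
writer = Agent("writer", lambda t: f"article from {t}")
crew = Crew([researcher, writer])
print(crew.chain(["researcher", "writer"], "AI agents"))
# article from notes on AI agents
```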

Limitations

- CrewAI is best suited to multi-agent coordination; for simpler tasks, it may be unnecessary.

- The community is growing but still young, so fewer learning resources are available.

- There are relatively few built-in templates.

Best for

CrewAI is a good option for startups that need frameworks for multi-agent or human-AI cooperative systems.

Use Cases

CrewAI could be used for creating virtual assistants, collaborative chatbots, fraud detection/risk workflows, and personalised learning/tutoring systems.

4. AutoGen

AutoGen is a Microsoft-built framework for creating multi-agent workflows that work with LLMs, memory, and tools. Like CrewAI, it allows multiple agents to collaborate.

Key Strengths

- Accessible for non-experts: AutoGen Studio provides a no-code/low-code interface for building agent-based workflows with minimal technical skills. This is useful for anyone who wants to develop AI agents without extensive AI expertise.

- Seamless integration with Microsoft Azure and OpenAI APIs: Developers can utilize their existing infrastructure and security protocols within workflows, as AutoGen seamlessly integrates with the Microsoft ecosystem, Azure, and OpenAI APIs.

- Ease of use and enterprise-ready security: A multi-agent workflow can be complex to build; however, AutoGen’s detailed documentation and enterprise-grade security practices enable teams to quickly kickstart and build enterprise-level applications.

Use Cases

AutoGen can be used for automated code generation, agent-based workflow construction, research, summarization, and document understanding.

Ideal Users

AutoGen caters to developers working with Microsoft Azure or those who prefer .NET or Python. Because it also offers a low-code interface, it suits anyone who wants to develop AI agents but lacks core development experience.

Limitations

- AutoGen might not be the best solution if you’re looking for high flexibility for building custom architectures.

- AutoGen works best within the Microsoft ecosystem; teams working on other platforms or needing custom integrations may find its integrations limiting.

5. Langflow

Langflow is designed to build Retrieval-Augmented Generation (RAG) applications and multi-agent systems using its open-source, low-code ecosystem. Developers can build complex workflows with more flexibility and less coding, integrating LLMs, vector databases, and external APIs.

Key Strengths

- Visual Builder: Langflow’s visual builder makes workflow creation quite effortless, even with several complex steps, LLMs, and data sources. The visual builder helps speed up the process, eliminating the need to code from scratch.

- Model-Agnostic: Langflow lets developers work with a variety of LLMs rather than a single provider. This flexibility makes it easy to switch models or data sources as needs change.

- Multi-Agent Orchestration: Like CrewAI and AutoGen, Langflow supports multi-agent collaboration, where several agents can be connected in a pipeline to solve complex tasks.
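
What a visual flow does can be approximated in code as components wired in sequence. This sketch assumes a tiny in-memory document list. It ranks documents by word overlap as a toy stand-in for vector search and uses a placeholder `fake_llm` instead of a real model. All names are hypothetical and unrelated to Langflow’s components.

```python
from typing import Callable, List

DOCS = [
    "Refunds are processed within 5 business days.",
    "Shipping is free for orders over 50 dollars.",
    "Support is available by email and chat.",
]


def retrieve(query: str, k: int = 1) -> List[str]:
    """Rank documents by word overlap with the query (toy stand-in for vector search)."""
    q = set(query.lower().split())
    ranked = sorted(DOCS, key=lambda d: len(q & set(d.lower().split())), reverse=True)
    return ranked[:k]


def build_prompt(query: str) -> str:
    # Retrieval-augmented prompt: retrieved context followed by the question.
    context = "\n".join(retrieve(query))
    return f"Context:\n{context}\n\nQuestion: {query}"


def fake_llm(prompt: str) -> str:
    # Placeholder for a real model call; simply returns the first context line.
    return prompt.splitlines()[1]


def pipeline(*steps: Callable[[str], str]) -> Callable[[str], str]:
    """Connect components in sequence, like nodes wired together in a visual flow."""
    def run(value: str) -> str:
        for step in steps:
            value = step(value)
        return value
    return run


rag = pipeline(build_prompt, fake_llm)
print(rag("How long do refunds take?"))
# Refunds are processed within 5 business days.
```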

Limitations

- Although the visual interface simplifies development, new users may still face a learning curve when building advanced workflows in Langflow.

- The visual builder offers limited control over agent behaviors, model selection, or deep workflow customization; these advanced tasks might require developers to code.

Ideal for

Langflow is best for teams and developers who want to rapidly prototype RAG and multi-agent systems, including document understanding and retrieval. It’s also a good option for teams with non-technical members who want a low-code interface that can still produce powerful AI agents.

Use Cases

Langflow can be used for rapid prototyping of chat or reasoning agents, educational and enterprise experimentation environments, and visual workflow design for multi-agent systems.

6. Semantic Kernel

Semantic Kernel is another Microsoft-developed open-source framework, similar to AutoGen, that helps integrate AI capabilities into traditional software applications. Developers can orchestrate multi-agent workflows using LLMs and built-in AI tools.

Key Strengths

- Multi-Language Support (Python, C#, Java): Semantic Kernel’s language-agnostic approach allows developers to work with languages like Python, C#, and Java. This way, developers can select suitable options according to their stack and build diverse systems, ranging from new applications to legacy systems.

- Enterprise-Grade Security and Scalability: Semantic Kernel’s enterprise-level security and scalability allow for secure agent orchestration and AI model integration. With its help, developers can build large-scale workflows and scale as needed.

- AI Model Orchestration: With Semantic Kernel, developers can build advanced systems with multiple AI agents and execute tasks in parallel or sequentially.

- Seamless Integration with Legacy Systems: Semantic Kernel allows developers to plug into legacy systems instead of rewriting the entire backend in a new technology.
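
The idea of plugging existing code into an AI orchestrator, rather than rewriting it, can be sketched with a simple function registry. This is a hypothetical pattern in plain Python, not Semantic Kernel’s plugin API: the `plugin` decorator exposes a legacy function under a name, and `call_tool` invokes it the way an orchestrator might after a model picks the tool.

```python
from typing import Callable, Dict

REGISTRY: Dict[str, Callable[..., object]] = {}


def plugin(name: str):
    """Decorator that exposes an existing function to the agent under `name`."""
    def register(func: Callable[..., object]) -> Callable[..., object]:
        REGISTRY[name] = func
        return func  # the original function stays usable by legacy callers
    return register


# Existing ("legacy") business logic, wrapped rather than rewritten.
@plugin("lookup_order")
def legacy_order_status(order_id: int) -> str:
    return {1001: "shipped", 1002: "pending"}.get(order_id, "unknown")


def call_tool(name: str, **kwargs) -> object:
    # In a real system a model would choose `name` and `kwargs`; here they are hard-coded.
    return REGISTRY[name](**kwargs)


print(call_tool("lookup_order", order_id=1001))  # shipped
```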

Ideal for

Enterprises that want to incorporate AI into existing software infrastructure and ensure data security, scalability, and robust orchestration.

Use Cases

Semantic Kernel can be used for developing chatbots, automation, semantic search, and AI productivity tools.

Limitations

- Users may face a steep learning curve when configuring orchestration.

- Setup is heavier in non-Microsoft environments.

7. Atomic Agents

Atomic Agents is an open-source framework for building decentralized, autonomous multi-agent systems. Developers can create a single agent for each atomic task, and these agents collaborate in a workflow to handle complex tasks.

Key Strengths

- Decentralized and Autonomous Agents: Atomic Agents supports building decentralized, autonomous agents, each of which functions independently without relying on a central server. This enables distributed decision-making and reduces the risk of a single point of failure.

- Cooperative Task Handling: Developers can create agents that focus on distinct and specialized roles. This allows easy replacements and upgrades without requiring changes to the entire system. The agents can also cooperate and communicate with each other to perform complex tasks together.

- Lightweight and Scalable: The ecosystem can handle a large number of agents without major overhead. Atomic Agents is lightweight and scalable, which is ideal for distributed environments.
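
The easy-replacement property follows from giving each atomic agent a fixed input and output contract. In this plain-Python sketch, two hypothetical counter agents share the same `TextIn` to `WordCountOut` contract, so one can be swapped for the other without touching the calling code. None of these names come from the Atomic Agents library.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class TextIn:
    text: str


@dataclass
class WordCountOut:
    words: int


class CounterAgent(Protocol):
    """Contract shared by every counter agent (hypothetical)."""

    def run(self, data: TextIn) -> WordCountOut: ...


class SimpleCounter:
    """Atomic agent that does exactly one job: counting whitespace-separated words."""

    def run(self, data: TextIn) -> WordCountOut:
        return WordCountOut(words=len(data.text.split()))


class HyphenAwareCounter:
    """Drop-in replacement with the same contract; treats hyphens as word breaks."""

    def run(self, data: TextIn) -> WordCountOut:
        return WordCountOut(words=len(data.text.replace("-", " ").split()))


def report(agent: CounterAgent, text: str) -> str:
    # The caller depends only on the contract, so agents can be swapped freely.
    return f"{agent.run(TextIn(text)).words} words"


print(report(SimpleCounter(), "open-source agent frameworks"))       # 3 words
print(report(HyphenAwareCounter(), "open-source agent frameworks"))  # 4 words
```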

Limitation

Although the framework is flexible, it can be hard to get started with, especially for those without a deep understanding of multi-agent system design and coordination.

Ideal for

Atomic Agents is best for organisations that want to scale AI systems operating in distributed environments. The framework is particularly suited to robotic process automation and multi-agent decision-making systems.

Use Cases

Atomic Agents can be used to build AI systems for robotics and IoT, decentralized data analysis, network monitoring, and simulation of multi-agent ecosystems.

8. RASA

RASA is an open-source framework designed to build conversational AI agents like chatbots and virtual assistants with a natural language processing (NLP) pipeline. With features like intent recognition, entity extraction, and dialogue management, developers can create interactive conversational agents with RASA.

Key Strengths

- Comprehensive NLU and Dialogue Management: RASA’s natural language understanding (NLU) and dialogue management let developers design conversations in which the AI understands users’ intents and needs and carries on a back-and-forth dialogue.

- Hybrid Rule-Based + ML Approach: RASA follows a hybrid approach where rule-based logic combines with machine learning models, allowing developers to customize conversation flow. In this model, AI can understand and handle complex user queries and adjust its responses accordingly.

- Multi-Channel Support: RASA supports integration with many messaging platforms, including Telegram, Slack, and Facebook Messenger. This allows developers to create AI agents for diverse channels.

- Enterprise-Level Security and Scalability: RASA’s role-based access control, data encryption, and scalable architecture allow enterprises to leverage the framework to handle their growing needs as well as sensitive data.
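
The hybrid approach can be sketched as rules first, model second. In this hypothetical Python example, regular-expression rules handle patterns that must always map to a fixed intent, and everything else falls through to a stand-in classifier that scores word overlap with example phrases. RASA itself uses trained NLU pipelines and its own configuration; none of the names here are RASA APIs.

```python
import re
from collections import Counter
from typing import Optional

# Rules: patterns that should always map to a fixed intent.
RULES = [
    (re.compile(r"\b(hi|hello|hey)\b", re.IGNORECASE), "greet"),
    (re.compile(r"\bcancel\b.*\border\b", re.IGNORECASE), "cancel_order"),
]

# Toy example phrases per intent; a real system would train a classifier on data like this.
EXAMPLES = {
    "check_balance": ["how much money do i have", "what is my balance"],
    "track_order": ["where is my package", "when will my order arrive"],
}


def rule_intent(text: str) -> Optional[str]:
    """Return the intent of the first matching rule, or None."""
    for pattern, intent in RULES:
        if pattern.search(text):
            return intent
    return None


def model_intent(text: str) -> str:
    """Stand-in for an ML model: pick the intent whose examples share the most words."""
    words = Counter(text.lower().split())

    def score(intent: str) -> int:
        return max(sum((words & Counter(ex.split())).values()) for ex in EXAMPLES[intent])

    return max(EXAMPLES, key=score)


def classify(text: str) -> str:
    # Rules take precedence; otherwise fall back to the statistical stand-in.
    return rule_intent(text) or model_intent(text)


print(classify("Hello there"))                  # greet
print(classify("Please cancel my order"))       # cancel_order
print(classify("where is my order right now"))  # track_order
```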

Limitations

- Developers may need to have a deep understanding of both machine learning and NLP to build advanced systems with RASA.

- Training custom ML models for large datasets or complex dialogue flows could be resource-intensive.

Ideal for

RASA caters to the needs of highly experienced teams with advanced technical knowledge who are capable of making scalable, highly customizable chatbots and virtual assistants.

Use Cases

RASA can be used to develop customer support chatbots, HR assistants, internal chatbots, and multi-channel conversation systems for websites, mobile apps, and social media.

9. Hugging Face Transformers Agents

Hugging Face Transformers Agents is designed to build AI agents with transformer-based models like GPT, BERT, and T5. Developers can leverage dynamic model orchestration, where multiple models cooperate to handle NLP tasks.

Key Strengths

- Dynamic Model Orchestration: Hugging Face presents dynamic model orchestration, where multiple transformer models can be integrated within a single agent.

- Fine-Tuning Flexibility: The framework also allows developers to fine-tune and customize pre-trained models. This helps boost performance and produce models customized for specific tasks.

- Powerful NLP Capabilities: Hugging Face comes with robust NLP features like text generation, summarization, question answering, and classification, making it suitable for many NLP use cases.

Use Cases

Hugging Face Transformers Agents can be used to build generative AI tools for e-commerce, news, and creative industries; AI-powered chatbots and recommendation engines for e-commerce platforms; and healthcare and legal applications for automated document analysis, contract review, and more.

Ideal for

Hugging Face is well-suited for organizations that want to build LLM-based agents and developers who seek fast prototyping and deployment of AI workflows.

Conclusion

Our in-depth analysis of nine of the best open-source AI agent frameworks highlights the top solutions available on the market. While each framework on our list suits particular use cases, GraphBit is our top pick because it suits developers and organizations of any size who want to build custom AI agents.

GraphBit stands out with its high concurrency and low resource usage, which make it suitable for large-scale development. Its Rust and Python architecture is a defining feature that further improves the framework’s efficiency and robustness. In the benchmarks above, GraphBit also outperformed popular frameworks such as LangChain and Pydantic AI. If you’re looking for a high-performance framework for building open-source multi-agent AI systems, try GraphBit.

FAQs

- What is the best framework for AI agents?

GraphBit is the best AI agent framework because it combines a Rust core with a Python wrapper, a hybrid structure that enables building AI agents with speed, security, and scalability.

- What is the best AI agent platform?

GraphBit’s hybrid structure, combining Rust and Python, its parallel orchestration engine, and enterprise-grade security, make it the best AI agent platform.

- What is the best AI framework?

According to its published benchmarks, GraphBit outperforms big names like LangChain and Pydantic AI, with a 92% improvement and a low error rate of 0.176%, making it the best AI framework on the market today.